MusicGAN

Automatic generation of audio samples using Generative Adversarial Networks.

---

Aim: To generate new music from old music.

Advisor: Prof. Dr. Kishor Uplah

Team members: Dharmik Bhatt, Insiyah Hajoori

Dataset: GTZAN from Kaggle.

---

MusicGAN is a research project that my team and I have been working on under Prof. Dr. Kishor D. Uplah of the Electronics Engineering Department.

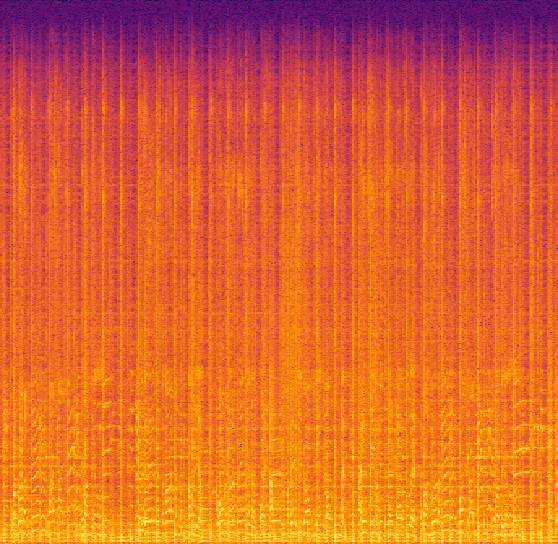

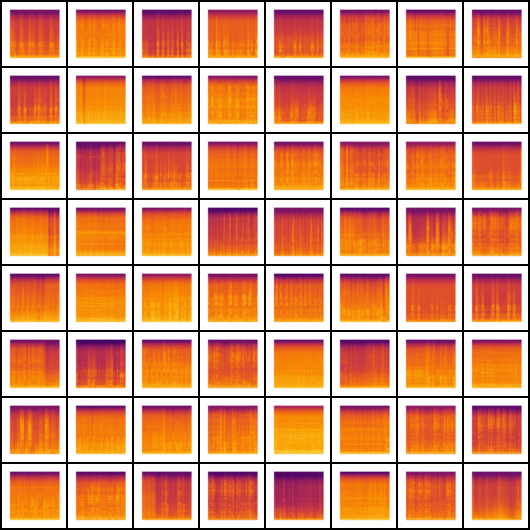

Our goal is to create new music without having to actually compose it ourselves.

This project uses Generative Adversarial Networks (GANs), implemented in PyTorch, to generate new music from old music.
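To make the adversarial idea concrete, here is a minimal, framework-free sketch of the two losses a standard GAN balances. It uses only the Python standard library so the arithmetic stays visible. The function names (`bce`, `discriminator_loss`, `generator_loss`) and the sample probabilities are illustrative assumptions, not taken from the project's code, and the project's actual loss functions may differ.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    eps = 1e-12  # guards against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(real_probs, fake_probs):
    """Real samples should be scored 1, generated samples 0."""
    real_term = sum(bce(p, 1) for p in real_probs) / len(real_probs)
    fake_term = sum(bce(p, 0) for p in fake_probs) / len(fake_probs)
    return real_term + fake_term

def generator_loss(fake_probs):
    """Non-saturating form: the generator wants its samples scored 1."""
    return sum(bce(p, 1) for p in fake_probs) / len(fake_probs)

# Hypothetical discriminator outputs (probability that a sample is real).
real_probs = [0.9, 0.8]  # scores on real audio clips
fake_probs = [0.2, 0.1]  # scores on generated clips

d_loss = discriminator_loss(real_probs, fake_probs)
g_loss = generator_loss(fake_probs)
print(f"discriminator loss: {d_loss:.4f}")
print(f"generator loss:     {g_loss:.4f}")
```

With these made-up scores the discriminator is winning: its loss is low while the generator's is high. Training alternates updates to the two networks so that each pushes the other's loss back up. In PyTorch the same quantity is typically computed with `torch.nn.BCELoss` on batched tensors.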

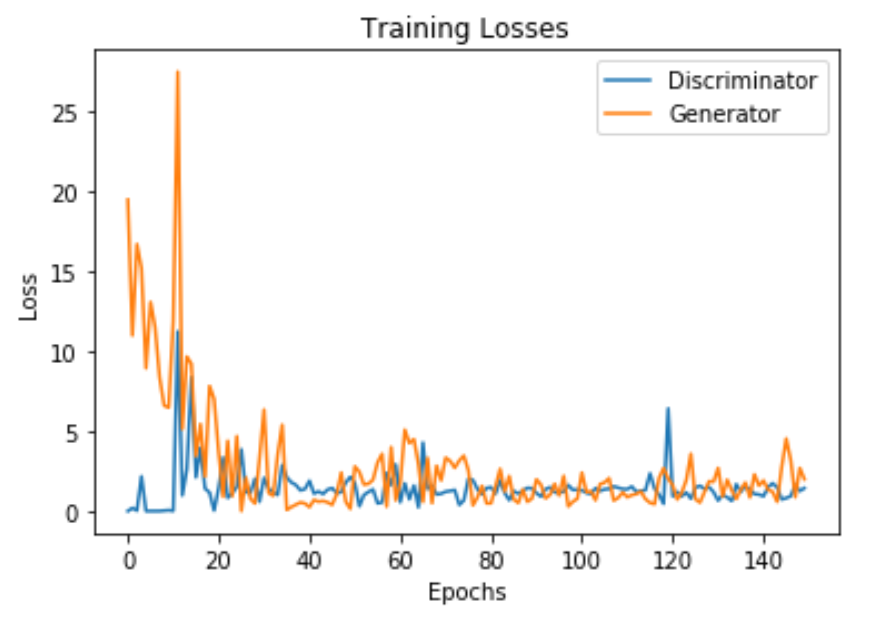

Of everything I learned from this project, the most significant was how GANs work. The majority of my work revolved around understanding these networks and tweaking parameters in my code to get the desired results. This also deepened my knowledge of Convolutional Neural Networks, which form the foundation of image processing. I have become more confident using PyTorch over the course of building this project. I plan to reimplement the project in TensorFlow to compare the two frameworks and to get a better idea of whether its time and space requirements would improve. I have also spent a lot of time with libraries for computer vision and audio processing, such as Librosa, Matplotlib, and SciPy.

Here is the GitHub link to our project. More information on the project pipeline is restricted; please reach out to me by email.