ColorizeGrayscale

Colorization of grayscale images using Generative Adversarial Networks.

---

Aim: To colorize grayscale images and understand the factors that influence coloring with GANs.

Advisor: Prof. R.P. Gohil

Team members: Ashna Arora, Shubham Yadav, Bhaskar Rabha

Datasets: COCO, CelebA and Places365

---

With rapid advances in technology, the amount of digital content has grown at a massive rate. However, much of the media from the monochrome era, such as antique photographs and black-and-white films, does not reach present-day audiences because it lacks visual appeal, and it is often dismissed as obsolete. Traditional colorization of black-and-white media relied heavily on manual work, requiring every single frame to be inspected, which costs both time and money. In recent years there have been several attempts to colorize grayscale images automatically using both Artificial Neural Networks and Generative Adversarial Networks (GANs).

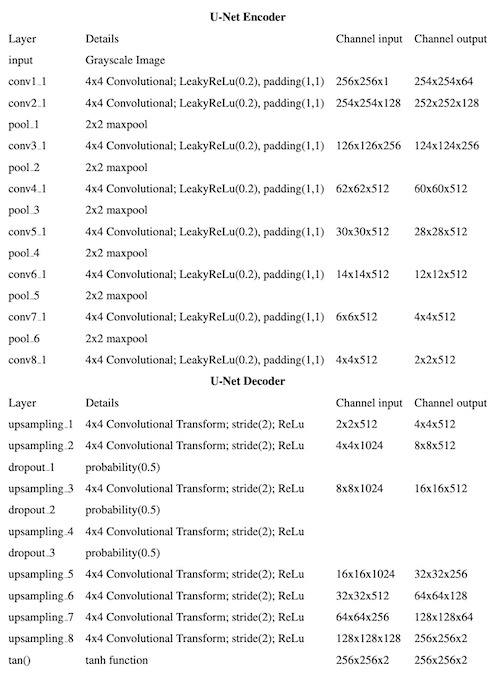

This project aims to colorize grayscale images using a Conditional Generative Adversarial Network (cGAN). It uses a cGAN with a U-Net architecture to generate realistic and vibrant colorizations of grayscale images. The cGAN is built in Keras, and the condition provided to the cGAN is an additional loss function. The project is coded in Python 2.7. All computations are performed on Google Colaboratory using a CUDA GPU for PyTorch.
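To make the "additional loss function" concrete, here is a minimal, framework-neutral NumPy sketch of a pix2pix-style cGAN objective. This is an assumption about the setup, not the project's code. The README does not state which adversarial loss or weighting it uses. Binary cross-entropy for the adversarial term and an L1 term weighted by `lam = 100` follow the common pix2pix formulation (Isola et al.). The function names and arguments (`generator_loss`, `discriminator_loss`, `d_fake`, `fake_ab`, and so on) are hypothetical. `d_real` and `d_fake` stand for the discriminator's probabilities on (grayscale, real colors) and (grayscale, generated colors) pairs.

```python
import numpy as np

EPS = 1e-7  # keeps log() away from 0 and 1

def bce(pred, target):
    """Mean binary cross-entropy between probabilities `pred` and 0/1 labels `target`."""
    pred = np.clip(pred, EPS, 1.0 - EPS)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))

def generator_loss(d_fake, fake_ab, real_ab, lam=100.0):
    """Adversarial term (push D to call the fake 'real') plus lam * L1 to the true colors.

    The L1 term is the assumed "additional loss": it keeps the predicted color
    channels close to the ground truth, while the adversarial term encourages
    realistic, vivid colors.
    """
    adversarial = bce(d_fake, np.ones_like(d_fake))
    l1 = float(np.mean(np.abs(fake_ab - real_ab)))
    return adversarial + lam * l1

def discriminator_loss(d_real, d_fake):
    """D should output 1 for real color pairs and 0 for generated ones."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))
```

A large `lam` makes the generator prioritize matching the ground-truth colors. A smaller one gives the adversarial term more influence, which tends to produce bolder but less faithful colors.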

Color Space

The CIE-LAB color space (also known as CIE L*a*b* or Lab color space) is a color space made up of three channels: L*, a* and b*. L* is the lightness, from black (0) to white (100); a* runs from green to red and b* from blue to yellow. Lower values of a* indicate green and higher values indicate red. Similarly, lower values of b* indicate blue and higher values indicate yellow. Lab is widely used in image processing. It is particularly useful for colorizing grayscale images because it separates lightness from color. Its channels are far less correlated than those of RGB, so a change to one channel has little effect on the others. The grayscale image can therefore serve directly as the L* channel, and the model only has to predict a* and b*.
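The following NumPy sketch shows how an RGB training image might be split into a lightness input and a two-channel color target. It uses the standard sRGB-to-L*a*b* conversion with a D65 white point. In practice a library routine such as scikit-image's `skimage.color.rgb2lab` does the same conversion. The helper `split_for_training` is hypothetical, and its scaling constants (dividing L* by 50 and a*b* by 110 to land roughly in [-1, 1]) are assumptions rather than values taken from this project.

```python
import numpy as np

# Linear sRGB -> CIE XYZ matrix and the D65 reference white
_RGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])

def rgb_to_lab(rgb):
    """Convert sRGB values in [0, 1] with shape (..., 3) to CIE L*a*b*."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Undo the sRGB gamma curve to get linear light
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    # Linear RGB -> XYZ, normalized by the reference white
    xyz = linear @ _RGB_TO_XYZ.T / _WHITE_D65
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0          # lightness, 0 (black) .. 100 (white)
    a = 500.0 * (f[..., 0] - f[..., 1])   # negative = green, positive = red
    b = 200.0 * (f[..., 1] - f[..., 2])   # negative = blue, positive = yellow
    return np.stack([L, a, b], axis=-1)

def split_for_training(rgb):
    """Hypothetical helper: L* is the network input, a*b* the target, both scaled to about [-1, 1]."""
    lab = rgb_to_lab(rgb)
    L = lab[..., :1] / 50.0 - 1.0   # 0..100 -> -1..1
    ab = lab[..., 1:] / 110.0       # roughly -110..110 -> -1..1 (assumed scale)
    return L, ab
```

At inference time the predicted a*b* would be rescaled, stacked with the input L*, and converted back to RGB for display.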

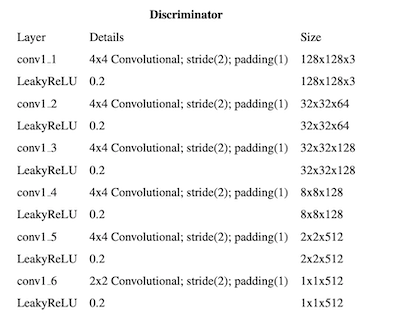

Conditional GAN model

Here is a screenshot of our report:

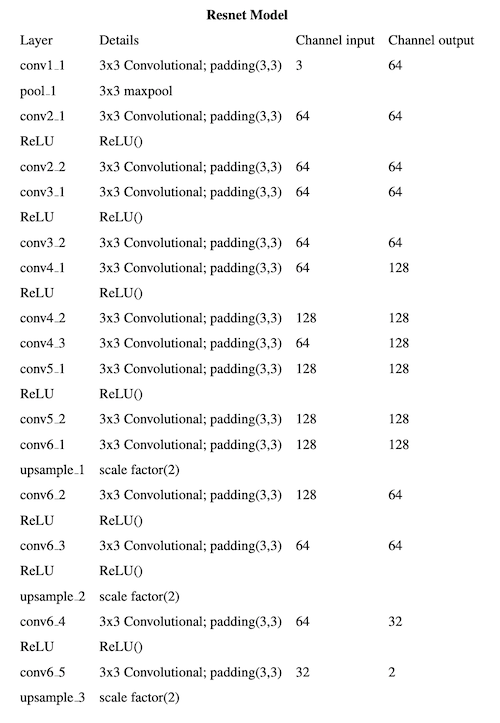

ResNet model

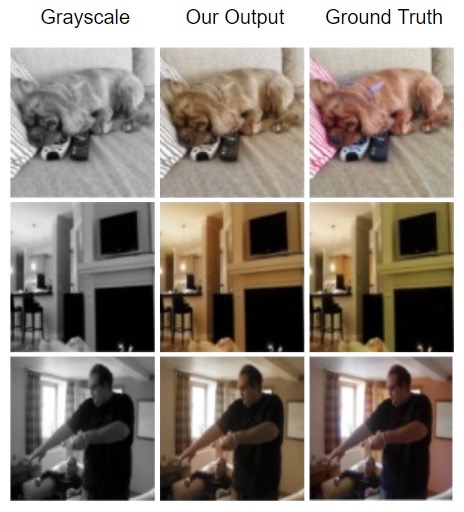

Results

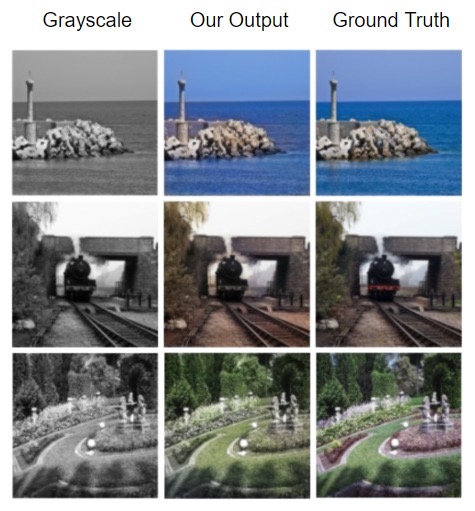

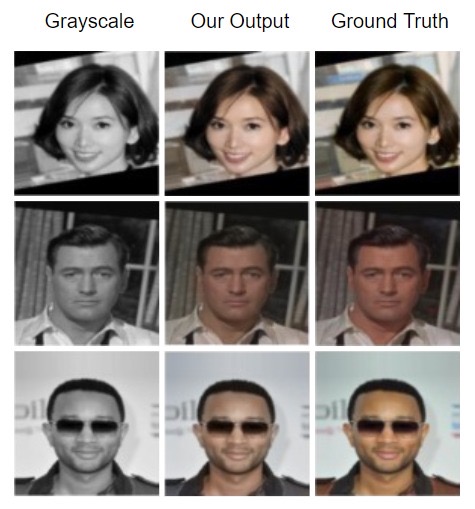

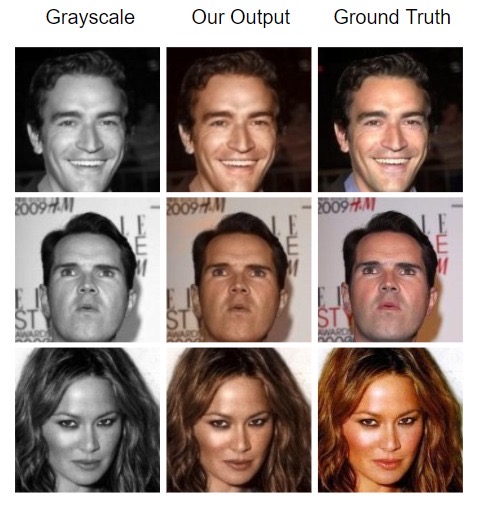

Conditional GAN results

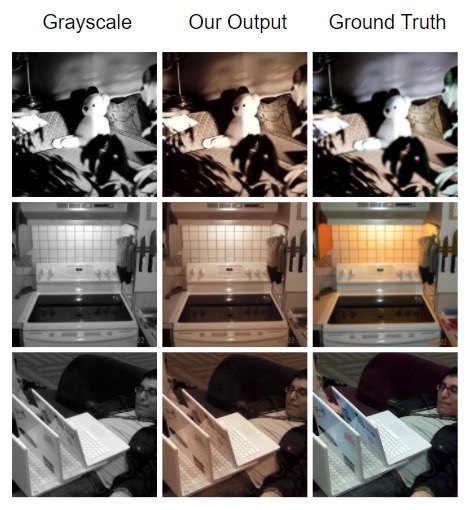

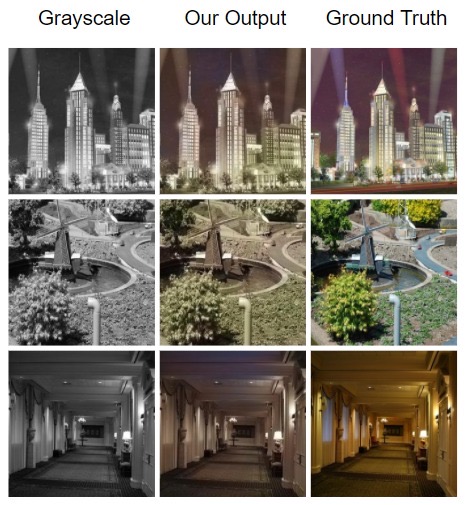

ResNet results

My team and I have also written a project report. It covers the models, color spaces, how GANs work, and existing colorization methods in detail.